About

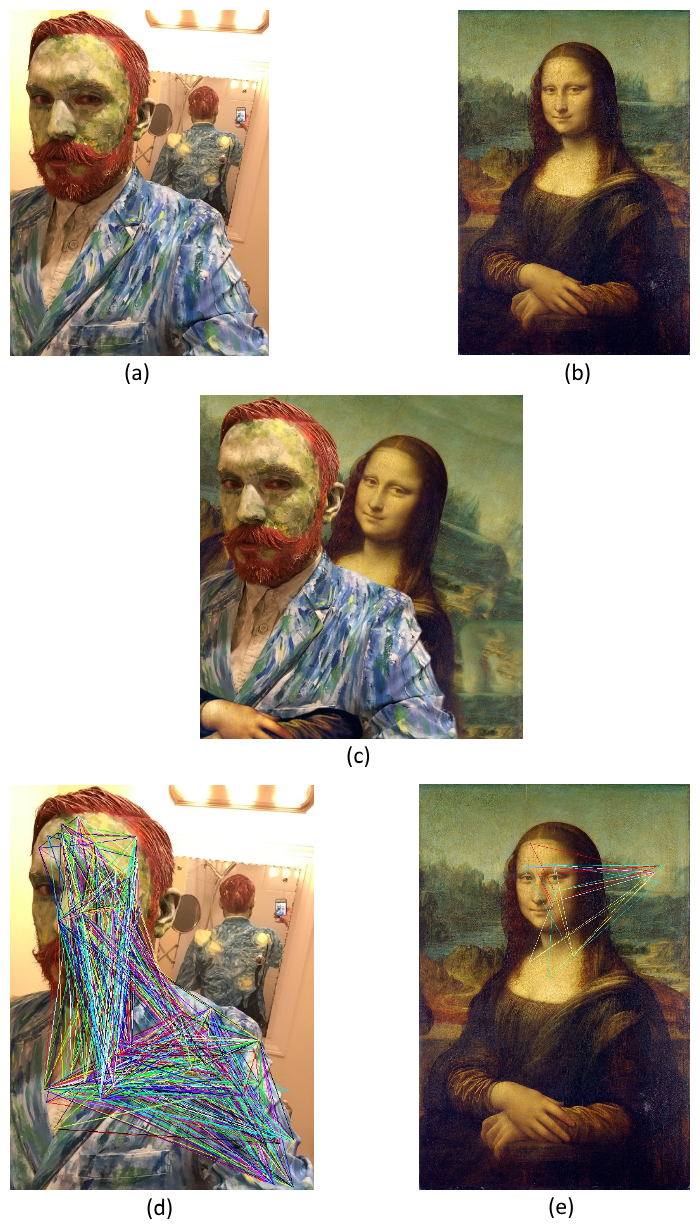

This demo showcases a reverse image search algorithm that performs partial image matching, invariant to 2D affine transformations, in sublinear time. The algorithm compares an input image against its database of preprocessed images and determines whether the input matches any of them. The input need not be identical to a database image, because it can be matched against any 2D affine transformation of that image. This means that images that have been scaled (uniformly or non-uniformly), skewed, translated, cropped or rotated, or have undergone any combination of these transformations, can be identified as coming from the same source image (Figure 1).
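Scaling, skewing, rotation and translation can be combined freely because every 2D affine transformation has the form x' = Ax + t, and composing affine maps always yields another affine map. Cropping is the one item in that list that is not affine; it is covered by the partial-matching side of the algorithm. The Python sketch below, with hypothetical helper names that are not part of this demo, composes a non-uniform scale, a skew, a 45-degree rotation and a translation into a single 3x3 homogeneous matrix.

```python
import math

# A 2D affine transform x' = A x + t stored as a 3x3 homogeneous matrix;
# the bottom row is always (0, 0, 1).
Matrix = list[list[float]]


def matmul(m: Matrix, n: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def scale(sx: float, sy: float) -> Matrix:
    # Uniform when sx == sy, non-uniform otherwise.
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def skew(kx: float) -> Matrix:
    # Horizontal shear: x' = x + kx * y.
    return [[1, kx, 0], [0, 1, 0], [0, 0, 1]]


def rotate(theta: float) -> Matrix:
    # Counter-clockwise rotation by theta radians about the origin.
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def translate(tx: float, ty: float) -> Matrix:
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def compose(*transforms: Matrix) -> Matrix:
    """Combine transforms into one matrix; the first argument is applied first."""
    result: Matrix = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    for t in transforms:
        result = matmul(t, result)
    return result


def apply(m: Matrix, point: tuple[float, float]) -> tuple[float, float]:
    """Apply an affine matrix to a 2D point."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Scale non-uniformly, skew, rotate 45 degrees, then translate.
combined = compose(scale(2.0, 0.5), skew(0.3), rotate(math.pi / 4), translate(10, -5))
print(combined[2] == [0, 0, 1])  # True: the composition is still a single affine map
```

However many transformations are chained, a matcher only ever has to cope with one matrix of this shape.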

The algorithm runs in sublinear time with respect to the number of images in the database, regardless of how many transformations have been applied to the input. This matters in practice: if image matching could not be done in sublinear time, it would not work at the scale that services such as Google or Microsoft require.
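How the demo achieves its sublinear bound is not spelled out here. A common approach, assumed in this sketch, is to store every image hash in a hash table keyed by hash value, so that a query probes the table instead of scanning every image. `build_index` and `lookup` are hypothetical names, and the integer hashes stand in for whatever the real preprocessing produces.

```python
from collections import defaultdict


def build_index(db: dict[str, list[int]]) -> dict[int, set[str]]:
    """Map each hash value to the IDs of the images that produced it."""
    index: dict[int, set[str]] = defaultdict(set)
    for image_id, hashes in db.items():
        for h in hashes:
            index[h].add(image_id)
    return index


def lookup(index: dict[int, set[str]], query_hashes: list[int]) -> set[str]:
    """Return the IDs of images sharing at least one hash with the query.

    Each dictionary probe takes expected O(1) time, so the cost depends on the
    number of query hashes and the size of the matching buckets rather than
    on the total number of images indexed.
    """
    found: set[str] = set()
    for h in query_hashes:
        found |= index.get(h, set())  # .get avoids inserting empty buckets
    return found


# Hypothetical database: image ID -> hashes computed during preprocessing.
db = {"cat.png": [11, 42, 97], "dog.png": [42, 58], "car.png": [7]}
index = build_index(db)
print(sorted(lookup(index, [42])))  # ['cat.png', 'dog.png']
```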

If the input is a composite of images or image fragments, the algorithm returns a match for each image or fragment (Figure 2).
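One plausible way to report several matches for a composite input, assumed here rather than taken from the demo, is to let each query hash vote for the database images it appears in and return every image whose vote count reaches a threshold. `match_all`, `min_votes` and the toy index are hypothetical.

```python
from collections import Counter

# Hypothetical index: hash value -> IDs of database images containing it.
INDEX: dict[int, set[str]] = {
    1: {"a"}, 2: {"a"}, 3: {"a", "b"}, 4: {"b"}, 5: {"b"}, 6: {"c"},
}


def match_all(index: dict[int, set[str]], query_hashes: list[int],
              min_votes: int = 3) -> list[str]:
    """Return every image whose hashes occur at least min_votes times in the query."""
    votes: Counter[str] = Counter()
    for h in query_hashes:
        for image_id in index.get(h, ()):
            votes[image_id] += 1
    # A composite input can push several source images past the threshold.
    return sorted(image_id for image_id, n in votes.items() if n >= min_votes)


# Query built from fragments of images "a" and "b", plus one stray hash of "c".
print(match_all(INDEX, [1, 2, 3, 4, 5, 6]))  # ['a', 'b']
```

The threshold trades recall for precision: a single shared hash (as with "c" above) is treated as noise rather than a match.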

How it Works

All images in the database have been preprocessed in this manner to produce hashes for comparison.
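The preprocessing steps themselves are not reproduced here. As a hedged illustration of how a hash can survive any 2D affine transformation, the sketch below uses one well-known invariant, assumed for illustration and not confirmed as this demo's method: the barycentric coordinates of a point relative to a triangle of reference points are unchanged by any invertible affine map. `barycentric`, `affine_hash`, `warp` and the quantisation `step` are hypothetical, and choosing the reference triangle consistently (for example from detected keypoints) is left out.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates (u, v, w) of point p relative to triangle abc,
    so that p = u*a + v*b + w*c with u + v + w = 1."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    if abs(det) < 1e-12:
        raise ValueError("degenerate triangle: the three points are collinear")
    v = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / det
    w = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / det
    return (1.0 - v - w, v, w)


def affine_hash(p, a, b, c, step=0.1):
    """Hypothetical hash: quantise v and w (u follows from u + v + w = 1).
    Values near a quantisation boundary may hash differently after a
    transform; a real system would need to handle that."""
    _, v, w = barycentric(p, a, b, c)
    return (round(v / step), round(w / step))


def warp(q):
    # An arbitrary invertible affine map: skew, non-uniform scale and shift.
    x, y = q
    return (2.0 * x + 0.5 * y + 10.0, 0.3 * x + 1.0 * y - 5.0)


tri = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
p = (1.2, 1.6)
print(affine_hash(p, *tri))                   # (3, 4)
print(affine_hash(warp(p), *map(warp, tri)))  # (3, 4)
```

The hash is identical before and after the warp because an affine map moves the point and the triangle together, leaving the point's position relative to the triangle unchanged.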

How it Compares to the Competition

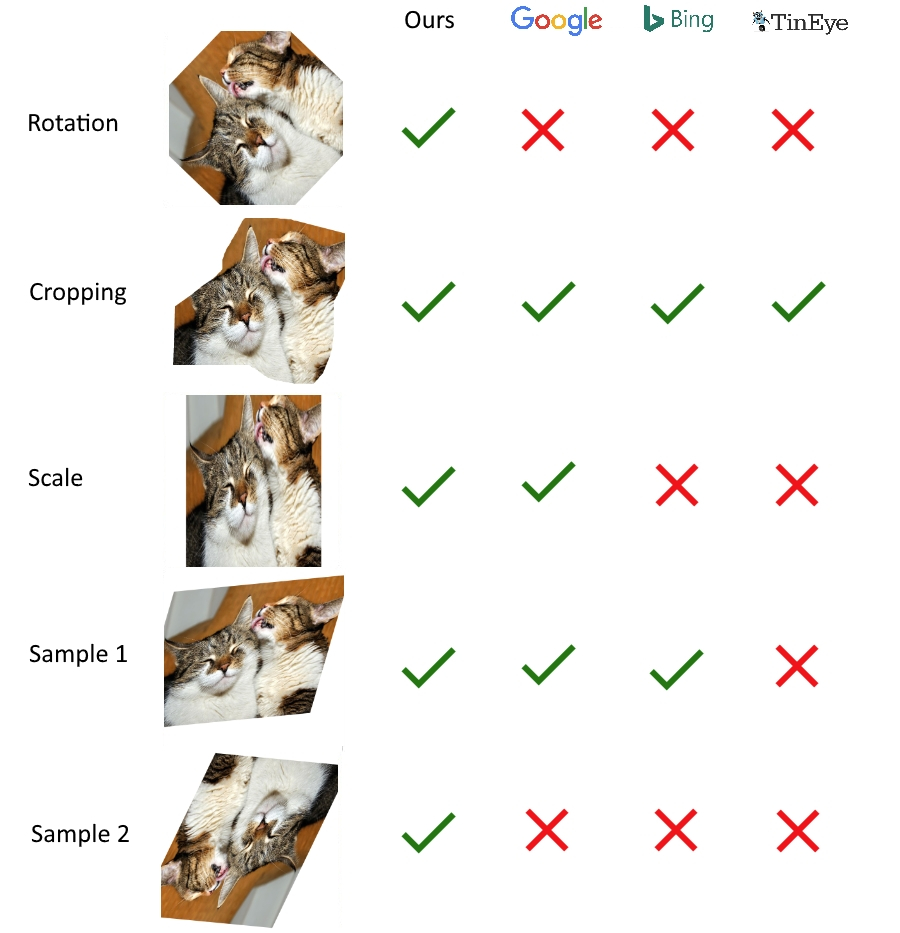

As Figure 4 shows, the algorithm outperforms industry leaders at matching images that have undergone 2D affine transformations. Even the best-performing of these services, Google Image Search, fails to match an image after a simple 45-degree rotation.

Market leaders show limited ability to match images that have undergone certain transformations. Our algorithm solves this problem for 2D affine transformations and, used in conjunction with other modern techniques, offers a significant improvement in reverse image search.